Making Sense of Millions of Data Points

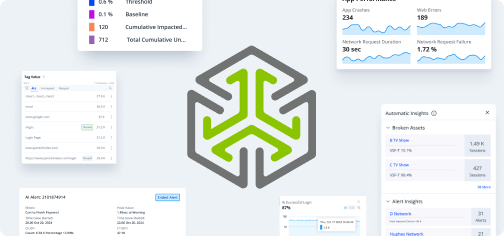

The key to delivering an optimal experience to every user is to comprehensively measure changes in their Quality of Experience (QoE) and react to them in real time. But that can mean scanning millions of unique user data points to identify anomalies, detect patterns, and address the root causes of issues. Operations teams are finding it increasingly difficult to keep up with the speed and scale of the user data they need to analyze. That’s where AI comes in.

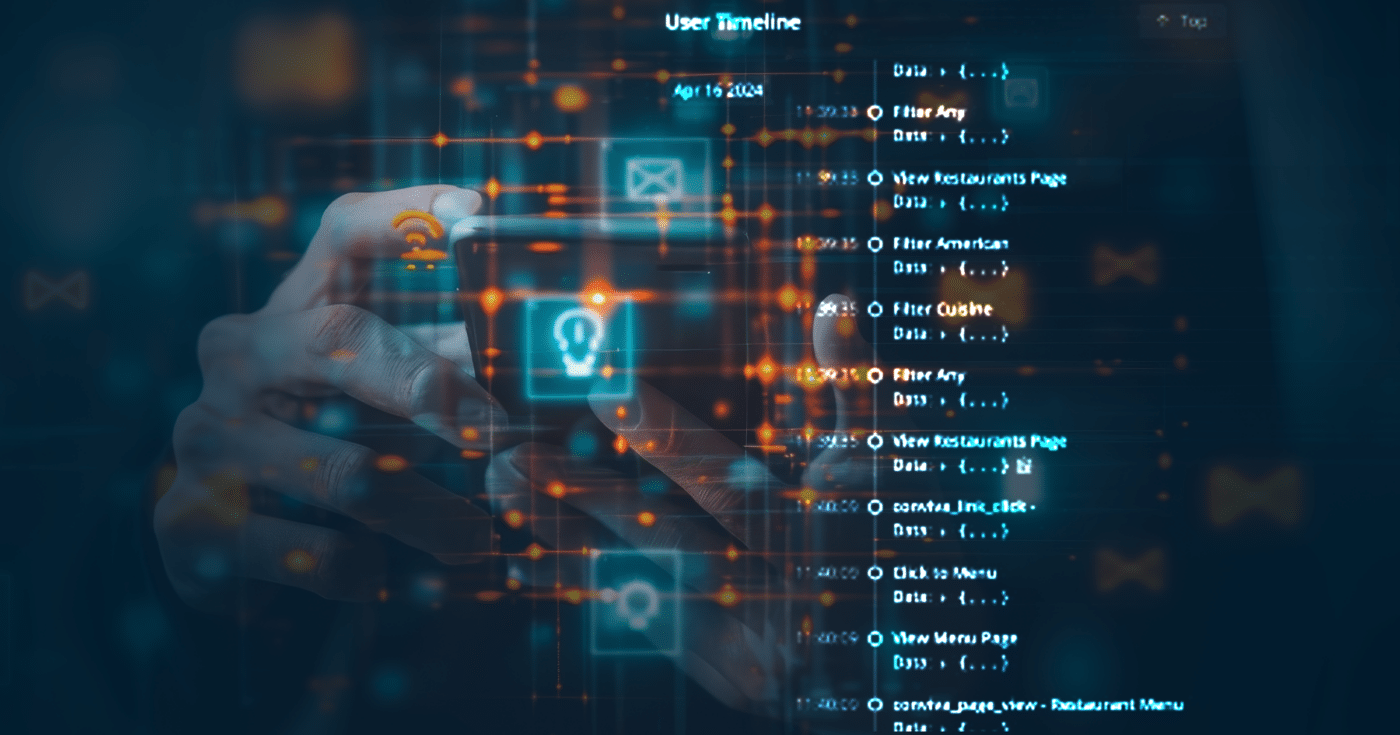

Visualizing the User Journey with User Timeline AI Summary

User Timeline is a feature that shows every event in a given timeframe, both the user’s actions and the events of the digital application itself. You can access the User Timeline either by searching for a hashed GUID based on your integration, or by drilling down directly while investigating metrics in Trends, Real-Time, or Alerts Dashboards.

This feature gives you a visual representation of how a user journeys through your application, and can give you deeper insights into issues they might have faced along the way. It eliminates noise and helps you zoom into the experience of one user so you can address their experience challenges directly.

The AI Summary is a recently released feature that summarizes the events for a set of users into a readable story about what happened. Our LLM is well suited to delivering these insights because compressing an event timeline into a story about a user’s experience, one that can lead to action, is a repeated comprehension task. The summary helps teams understand the user experience and therefore improve engagement. It is also a particular challenge for many LLMs: an LLM thrives at pattern recognition, whereas we wanted to highlight and accentuate the unexpected events that do not match typical user timeline patterns, because those are more likely to be interesting or to indicate an issue, an error, or a divergence in user behavior.
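To make the idea of accentuating unexpected events concrete, here is a minimal sketch in Python. It is not how the AI Summary is implemented; it simply ranks the events in one timeline by how rarely they appear in a set of baseline timelines. The function name `rare_events`, the event names, and the add-one smoothing are all assumptions chosen for illustration.

```python
from collections import Counter
from math import log


def rare_events(timeline, baseline_timelines, top_n=3):
    """Return the events in `timeline` that are least common in the baseline.

    Hypothetical helper for illustration only: events are plain strings and
    "surprise" is the negative log of a smoothed baseline frequency.
    """
    counts = Counter(e for t in baseline_timelines for e in t)
    total = sum(counts.values())
    vocab = len(counts) + 1  # +1 leaves room for event types never seen before

    def surprise(event):
        # Add-one smoothing gives unseen events a finite but high score.
        p = (counts[event] + 1) / (total + vocab)
        return -log(p)

    # dict.fromkeys removes duplicates while keeping first-seen order.
    unique = list(dict.fromkeys(timeline))
    return sorted(unique, key=surprise, reverse=True)[:top_n]


# Made-up baseline sessions and one session under investigation.
baseline = [
    ["app_start", "login", "browse", "play", "app_exit"],
    ["app_start", "login", "browse", "play", "pause", "play", "app_exit"],
    ["app_start", "login", "search", "play", "app_exit"],
]
session = ["app_start", "login", "play", "payment_error", "retry",
           "payment_error", "app_exit"]

print(rare_events(session, baseline, top_n=2))
```

In this made-up session, the two events that never occur in the baseline (`payment_error` and `retry`) rank highest, which is the kind of divergence a summary would want to call out.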

Swimming in Data: How To Become An Expert In A Massive Dataset

Becoming an expert in a massive dataset is all about diving in and looking at as many low-level, non-aggregated examples as you can. You immerse yourself in the data one customer, user, session, or data point at a time. Data immersion is a great strategy for familiarizing new users with extremely large datasets, but it is extremely time-consuming. AI can help make those datasets more digestible and comprehensible.

The more we can convert machine data into a story, the easier it becomes to digest what’s actually happening within a user timeline. It also becomes easier to interpret the network requests as what the user was actually doing or experiencing along that journey.

For many of the main use cases for QoE data, you start by looking at the outliers and anomalies, which gets you accustomed to seeing user timelines and journeys that are actually abnormal, until you no longer know what normal looks like. I remember the first time I ever looked at Conviva data, almost six years ago, when I had to pause and realize that I didn’t know why the data looked abnormal because I didn’t know what normal looked like. This leads us to our second use case: training others to use this data.

Practical Applications of User Timelines and AI Summary

Accelerating Onboarding

It is much easier to train people to read and understand data when it is human-readable, making it easier to train others on how to use data under specific conditions, especially if they have never looked at Conviva data before. With User Timelines, new team members can quickly understand how Conviva measures QoE down to the individual user. They can begin to understand how the comprehensive, contextual data analysis that can be done with the Conviva Operational Data Platform is an aggregated view of the journeys and actions taken by millions of unique users.

Accelerating Customer Care Resolution

Many Conviva customers use User Timelines in their call centers to arm their agents with the right data to solve any inquiry they might receive. By accurately matching what the user was experiencing, the agent in the call center can have a real conversation with the customer about their problem, leading to faster resolution. We’ve seen call centers that were able to reduce the time for technical troubleshooting by seven minutes on average.

For agents in a call center, many of whom aren’t trained in computer science or networking, reading raw data in a timeline is daunting, draining, and time-consuming. AI is better at picking out patterns and summarizing the user experience into a timeline that is much more understandable and usable for the agent, allowing them to reach a resolution with the customer via phone, chat, or email much faster.

Improving Quality of Experience For Every User

Improving QoE at the user level involves drilling down into the data when you have an anomaly you want to investigate. The user timeline helps you understand what the problem is. A major use case comes from our AI alerts, which flag anomalies in real time. For Network Operations Centers, this involves determining whether a metric is spiking, what is broken, why, and whether there is any customer impact. They need to act as quickly as possible because, for every minute something is broken, users can become frustrated and may try to contact call centers or even cancel their subscriptions.

By examining a few user timelines, you can determine whether this is a repeat issue, where the user keeps encountering the same problem despite attempted workarounds, or a one-off issue that the user can resolve themselves. These are two very different types of incidents with different resolutions and escalation needs. Being able to use an AI summary to quickly identify the type of user experience makes it much easier to determine the severity of the incident and assign it correctly. The AI summary also makes it easier to pick out specific workflows that may be causing the issue.
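The repeat-versus-one-off distinction can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the product’s logic: the function name `classify_incident`, the event names, and the threshold of two occurrences are all hypothetical.

```python
from collections import Counter


def classify_incident(timeline, error_events, repeat_threshold=2):
    """Label one user timeline as 'repeat', 'one_off', or 'no_error'.

    Hypothetical helper: `timeline` is a list of event names and
    `error_events` is the set of names treated as errors.
    """
    errors = Counter(e for e in timeline if e in error_events)
    if not errors:
        return "no_error"
    # The same error seen at least `repeat_threshold` times counts as a repeat.
    if max(errors.values()) >= repeat_threshold:
        return "repeat"
    return "one_off"


# Made-up timelines for three users.
timelines = {
    "user_a": ["play", "buffer_error", "retry", "buffer_error", "app_exit"],
    "user_b": ["play", "buffer_error", "retry", "play"],
    "user_c": ["login", "browse", "play"],
}
for user, events in timelines.items():
    print(user, classify_incident(events, {"buffer_error"}))
```

A simple count like this says nothing about root cause, but it shows why a handful of timelines can be enough to decide how an incident should be escalated.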

Resolving Long-Term Baseline Problems

Looking at the user timeline and utilizing an AI summary can be helpful in assessing the root cause of a problem. It’s all about pattern recognition, identifying similarities in the user experience, and determining how impactful that experience really is. When you can turn machine data into a readable story about the user experience, it becomes much more tangible.

Centralizing Data Analysis

Making sense of a large, raw, and daunting set of timeline data is a problem not just for non-engineers and call center use cases, but for anyone trying to analyze data. AI gives team members of all technical levels the clarity to know which data points are most relevant to the challenges they’re trying to solve. From product teams conducting long-term analysis of feature usage to teams of developers trying to debug quality issues in real time, all teams benefit from the power of AI. Instead of digging through endless, disparate data sets, anyone can leverage AI to surface the most critical trends and data sets that will have the biggest impact on their KPIs.

Data is king, but examples bring it to life.

The more you can collaborate with teams, including engineers and users of the product, the easier it will be to develop an AI product that functions as desired. The most important focus should be on understanding how people are really using the tool before you integrate AI to enhance its functionality. As AI becomes ever more prevalent, it is important to remember that many use cases stem from repeated mental tasks (like reading) rather than discrete system actions, which makes them more nuanced and ambiguous.

Have you used this AI Summary feature or any other summary feature produced by an LLM? What did you like or dislike about it? What do you think about using AI for data interpretation? Where do you think LLMs can have even more benefit in the world of Experience Centric Operations? Reach out to get started today!