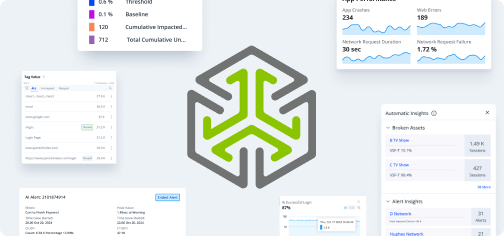

Experience-centric operational analytics requires more than business analytics or sample-level analytics. It requires census-level measurement of vast datasets, along with both real-time monitoring and operational analytics on high-cardinality live and historical data, to detect unknown issues, identify root causes, and surface areas for improvement.

Historically, the cost and resources required to do this type of analytics in real time and at scale have been prohibitive. Most existing solutions are based on SQL data structures, and adapting them to census-level, stateful computation comes at an exorbitant cost and requires significant resources.

Time-State Analytics: Unlocking the Key to Experience-Centric Analysis

Conviva has pioneered a new data analytics paradigm called Time-State Analytics that enables companies to do experience-centric operational analytics at census level and at scale, at one-tenth the cost and 10X the speed of what they can achieve today.

Customers get the benefit of native unification and synchronization of all their data sets across systems, which connects cause and effect across system performance, user experience, and user engagement in real time. Because it can analyze patterns across infrastructure, device, and user behavior over time, the entire process of detecting issues, finding their root causes, and spotting opportunities to improve operations can happen in minutes, end-to-end, at massive scale and with high precision.

We’ve built a new computational model, query language, and unified infrastructure inside our Operational Data Platform to support Time-State Analytics.

We first presented our approach to Time-State Analytics in our paper, Raising the Level of Abstraction for Time-State Analytics with the Timeline Framework, which was accepted at the Conference on Innovative Data Systems Research (CIDR) in Amsterdam in January 2023.

Where Traditional Data Processing Falls Flat

The discipline of Time-State Analytics involves modeling context-sensitive metrics computed over continuously evolving states across any system.

Existing data processing systems, including streaming systems, time-series databases, SQL extensions, and batch systems, are not well suited to time-state analytics. These systems don’t provide good abstractions for modeling processes that evolve continuously over time. They also result in poor cost-performance tradeoffs and significant development complexity.

The most common solutions are SQL-based, and they are powerful for certain types of data management and analysis. But there are several reasons why they are not inherently optimized for the stateful computation required in Time-State Analytics:

- Tabular Structure: SQL databases and tabular solutions are designed to store data in rows and columns. While this structure is excellent for querying and aggregating individual data points, it is not well-suited for representing and analyzing data over time with changing states.

- Lack of Native Time-Related Features: SQL offers few built-in features for representing how state evolves over time. Timestamps and window functions exist, but tracking changes over time and maintaining the state of data at different points in time largely has to be expressed by hand.

- Complexity of State Transitions: Stateful computation often involves tracking and modeling complex state transitions or sequences of events. With SQL, joining and analyzing data based on events happening at different times requires constant querying, creating latency at scale.

- Limited Pattern Matching: SQL does provide some basic pattern matching functionality using the LIKE operator and wildcard characters, but complex pattern mining and analysis require more advanced functionalities that SQL does not support.

- Performance Considerations: SQL databases are optimized for efficient data retrieval and processing, primarily through indexing and query optimization techniques. However, stateful computation often involves computationally intensive operations that go beyond the capabilities of SQL-based systems, resulting in slower performance.

- Data Volume and Scalability: As data volume grows, stateful computation in SQL can become increasingly inefficient and costly. Joining data from multiple tables or maintaining an infinite table to capture every state change over time is not practical in most scenarios.

- Continuous Unification of Data: Stateful computation requires continuous unification of data from various sources, spanning both real-time and historical data. SQL databases often operate in a batch mode, which is not designed for real-time, continuous analysis of data changes.

- High-Level Abstraction Requirement: Stateful computation demands a higher-level abstraction that natively understands time and data changes over time. SQL, being a lower-level abstraction, requires extensive coding and instructions to handle complex stateful scenarios effectively.

- Complex Queries and Tradeoffs: SQL-based systems often require complex queries to handle stateful computations. These queries might involve tradeoffs between granularity, efficiency, and accuracy, leading to potential blind spots and false negatives in the analysis.
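As a rough illustration of the state-transition point above, the following Python sketch shows what row-oriented processing has to do just to answer how long a session spent in each state. It is not Conviva code: the rows, state names, and `time_in_state` helper are hypothetical. Each row records only the moment a state began, so durations must be recovered by pairing every row with its successor, which in SQL would typically mean a self-join or a window function.

```python
from collections import defaultdict

# Hypothetical player-state change rows: (timestamp_seconds, new_state).
rows = [
    (0, "init"),
    (2, "playing"),
    (10, "buffering"),
    (13, "playing"),
    (20, "buffering"),
    (21, "playing"),
]


def time_in_state(rows, session_end):
    """Sum the seconds spent in each state, given state-change rows."""
    ordered = sorted(rows)
    totals = defaultdict(int)
    # Pair each row with the next one to recover how long the state lasted;
    # the last state lasts until the (assumed known) end of the session.
    boundaries = ordered[1:] + [(session_end, None)]
    for (start, state), (end, _) in zip(ordered, boundaries):
        totals[state] += end - start
    return dict(totals)


totals = time_in_state(rows, session_end=30)
print(totals)
```

Adding a second attribute, such as which CDN was in use at the time, means intersecting two such sets of intervals, which is where the row-based approach starts to get complicated.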

Why Time-State Analytics Is the Future of Data Analysis

To address these challenges, our technology raises the level of abstraction to support time-state analytics workloads by introducing a new abstraction called Timelines. Timelines can model dynamic processes more directly and express the requirements of time-state analytics easily and intuitively. Timelines offer a more efficient way to write queries compared to conventional approaches such as streaming systems, time-series databases, and time-based extensions to SQL. To support this, we’ve codified the concept of Timeline Algebra, which defines operations over three basic Timeline Types: state transitions, discrete events, and numerical values.
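To give a feel for the idea, here is a minimal Python sketch of the three Timeline Types and a few operations over them. It is an illustration under stated assumptions, not Conviva’s Timeline Algebra or query language: the `StepTimeline` class, the helper functions, and the session data are all hypothetical names invented for this example.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass
class StepTimeline:
    """Piecewise-constant timeline, used here for states and numeric values."""
    times: list   # sorted change points
    values: list  # values[i] holds from times[i] until times[i + 1]

    def at(self, t):
        """Value at time t, or None before the first change point."""
        i = bisect_right(self.times, t) - 1
        return self.values[i] if i >= 0 else None


def combine(fn, a, b):
    """Pointwise combination of two step timelines into a new one."""
    times = sorted(set(a.times) | set(b.times))
    return StepTimeline(times, [fn(a.at(t), b.at(t)) for t in times])


def duration_where(timeline, predicate, end):
    """Total time before `end` during which predicate(value) holds."""
    total = 0
    bounds = timeline.times[1:] + [end]
    for start, stop, value in zip(timeline.times, bounds, timeline.values):
        if predicate(value):
            total += max(0, min(stop, end) - start)
    return total


def time_weighted_mean(timeline, end):
    """Mean of a numeric timeline, weighted by how long each value held."""
    bounds = timeline.times[1:] + [end]
    weighted = sum(v * (stop - start)
                   for start, stop, v in zip(timeline.times, bounds, timeline.values))
    return weighted / (end - timeline.times[0])


def count_events_where(event_times, timeline, predicate):
    """Count discrete events that occur while predicate(value) holds."""
    return sum(1 for t in event_times if predicate(timeline.at(t)))


# Hypothetical data for one 30-second session.
player_state = StepTimeline(
    [0, 2, 10, 13, 20, 21],
    ["init", "playing", "buffering", "playing", "buffering", "playing"],
)                                                    # state transitions
cdn = StepTimeline([0, 12], ["cdn_a", "cdn_b"])      # state transitions
bitrate_kbps = StepTimeline([0, 13], [3000, 1500])   # numerical values
seek_events = [11, 25]                               # discrete events

# Context-sensitive metric: seconds spent buffering while on cdn_a.
buffering_on_a = combine(
    lambda state, c: state == "buffering" and c == "cdn_a", player_state, cdn
)
buffering_seconds = duration_where(buffering_on_a, bool, end=30)

seeks_while_buffering = count_events_where(
    seek_events, player_state, lambda state: state == "buffering"
)
mean_bitrate = time_weighted_mean(bitrate_kbps, end=30)
print(buffering_seconds, seeks_while_buffering, mean_bitrate)
```

In this sketch, the metric "seconds spent buffering while on cdn_a" is a composition of two operations over whole timelines, rather than a join over individual rows.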

Conviva’s Time-State Technology can compute stateful, context-rich metrics in real time and perform flexible aggregation and filtering directly on this data, enabling a full integration of monitoring and analytics in a single platform.

Beyond video streaming operations, Conviva’s Time-State Technology can be applied to various industries, including cybersecurity, e-commerce, sports performance, healthcare, IoT, and many more.

Some common applications of time-state analytics include:

- Financial Analysis: Analyzing historical stock prices, currency exchange rates, or other financial data to identify trends and patterns that can help predict future market movements.

- Predictive Maintenance in IoT: Analyzing sensor data from industrial equipment or machinery to detect patterns that indicate potential failures or maintenance needs, allowing for proactive maintenance planning.

- Healthcare Analysis: Analyzing historical health records and disease outbreak data to identify patterns and trends in disease prevalence, spread, and impact, which can inform public health policies and interventions.

- Sports Performance Analysis: Analyzing performance data of athletes, such as heart rate, speed, and skill metrics, to evaluate performance trends, identify strengths and weaknesses, and optimize training programs.

Time-State Analytics sits at the intersection of observability and analytics. It allows users to identify and solve problems faster than they have ever been able to, and it alerts them to issues they didn’t even know they should be monitoring for.

As data volumes and data complexity continue to grow, time-state analytics simplifies analysis by modeling real-time system performance, user experience, and user engagement, correlated and in full context. There is no more synchronizing disparate data sets and executing complex SQL code to transform tabular data into time-series data, only to still end up with gaps in your measurement. Conviva’s approach with time-state analytics delivers contextual data that teams can act on instantly and trust to make informed business decisions.