Your business needs Experience-Centric Operations

While observability and Application Performance Monitoring (APM) tools have transformed how engineering and operations teams work over the last ten years, every VP of Ops and CTO still faces several challenges with no clear solution in sight:

- Being surprised by customer issues on social media, from customer support calls, or through the CEO, even when their tools say everything is fine.

- Needing an army of expert engineers to debug whenever a major incident happens.

- Exploding costs of observability tools to monitor their growing infrastructure.

In the previous entry in this series, we established that operations need to evolve from focusing on low-level system performance to focusing on higher-level user experience in order to solve these problems for businesses. Keep reading this second entry to discover how and why existing observability tools fail to bridge the performance-experience gap.

Observability Tools Cannot Bridge the Experience Disconnect

Today’s monitoring tools focus almost exclusively on backend servers and applications. Unfortunately, this means ops teams are completely disconnected from the user experience, leaving them guessing in the dark with no context for prioritizing. When an escalation comes in because users can’t sign up, an army of expert engineers must be brought in to find the issue, interrupting their high-value work. Since there is no clear path through the data to the root cause, even the engineers are reduced to guesswork, scouring every system in the sign-up path for a possible cause. This is slow, expensive, and disruptive. Once they do find a probable cause, make time, and fix it, they still don’t know for sure whether they addressed the real problem, since there is no direct validation of user experience.

The disconnect from user experience and the need to find a way to measure it directly is a known problem for companies, and observability tools have been trying to address it with two approaches.

- By ingesting more data from backend servers and applications, observability solutions hope to capture a higher percentage of user-impacting issues. This means capturing more logs and more metrics, and introducing traces or capturing more of them. This bloat has led to higher costs for companies without ultimately solving their problem: it is simply not possible to understand experience from backend sources alone.

- Observability solutions introduced Real User Monitoring (RUM) tools to try to capture user experience. RUM unfortunately falls noticeably short in solving the problem, as the tools are built on severely limited technology, which is expensive to use, lacking in functionality, and only capable of running on a small sample of users. Read more about the limitations of RUM tools here.

Neither of these costly approaches is capable of solving the performance-experience disconnect. It continues to be a major stress point for companies because, despite the human, technological, and financial resources being thrown at the problem, surprise escalations are still happening every day, stealing expert engineers away from innovation for the business.

What’s the problem with legacy observability tools?

The biggest drawback is fundamental: today’s solutions cannot compute true experience metrics in real-time because they simply do not have the foundational technology needed for the task. All these tools are built merely to count events such as crashes, errors, and page loads, and even this they struggle to accomplish at scale.

Experience, however, cannot be computed as a simple count of events. Understanding the complexities of the user journey requires us to compute complex metrics based on timing, time intervals, sequences, and state. We call this kind of computation stateful analytics, and a metric built on it a stateful metric.

Let’s look at this more in-depth:

A count of errors is a stateless metric. It does not depend on understanding sequences, time intervals, or state.

Time to Sign Up is a stateful metric (granted, a fairly simple one, but RUM tools cannot even compute this). It can be considered stateful because the monitoring system must identify the start and successful completion of sign-up for a particular session (a process we call sessionization), then compute the time difference between the two for each session, then aggregate them across sessions—all in real-time.
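To make the contrast concrete, here is a minimal Python sketch of the two kinds of metric. It is only an illustration under stated assumptions: the event names (`signup_start`, `signup_complete`, `error`), the tuple layout, and both function names are hypothetical, and a real system would do this over a live stream rather than an in-memory list.

```python
from statistics import median

# Hypothetical event stream: (session_id, event_name, timestamp_seconds).
EVENTS = [
    ("s1", "signup_start", 0.0),
    ("s2", "signup_start", 1.0),
    ("s1", "error", 2.0),
    ("s1", "signup_complete", 12.0),
    ("s2", "signup_complete", 31.0),
    ("s3", "signup_start", 40.0),  # never completes
]


def count_errors(events):
    """Stateless: every event is judged on its own, with no memory."""
    return sum(1 for _, name, _ in events if name == "error")


def time_to_sign_up(events):
    """Stateful: remember each session's open sign-up until it completes."""
    started = {}    # session_id -> timestamp of the first signup_start
    durations = {}  # session_id -> seconds from start to completion
    for session_id, name, ts in sorted(events, key=lambda e: e[2]):
        if name == "signup_start":
            started.setdefault(session_id, ts)
        elif name == "signup_complete" and session_id in started:
            durations[session_id] = ts - started.pop(session_id)
    return durations


durations = time_to_sign_up(EVENTS)
error_count = count_errors(EVENTS)
median_time_to_sign_up = median(durations.values())
```

The error count needs no memory between events, while the sign-up metric has to hold per-session state (`started`) and only then aggregate across sessions. Session `s3` never completes, so it contributes nothing to the aggregate.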

We can introduce a new level of complexity to the Time to Sign Up metric by excluding any time spent outside the app. Let’s say the user received a text while signing up, so they spent a minute in their messaging app responding to it in the middle of the sign-up process. This minute should be excluded from the Time to Sign Up metric. You can see how the user session rapidly becomes much more complex than simply calculating time between two events.
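A rough sketch of that extra state, again in Python and again with hypothetical names: it assumes the client emits `app_background` and `app_foreground` events alongside the sign-up events, and that the events for one session arrive in time order.

```python
def active_time_to_sign_up(events):
    """Return seconds spent in the app between sign-up start and completion.

    events: time-ordered (event_name, timestamp_seconds) for ONE session.
    Returns None if the sign-up never completes.
    """
    start = None             # when the sign-up began
    background_since = None  # when the app last went to the background
    paused = 0.0             # total seconds spent outside the app
    for name, ts in events:
        if name == "signup_start":
            start, background_since, paused = ts, None, 0.0
        elif start is None:
            continue  # ignore everything before the sign-up begins
        elif name == "app_background" and background_since is None:
            background_since = ts
        elif name == "app_foreground" and background_since is not None:
            paused += ts - background_since
            background_since = None
        elif name == "signup_complete":
            if background_since is not None:
                paused += ts - background_since
            return (ts - start) - paused
    return None


# The user leaves for a minute to answer a text mid sign-up.
session = [
    ("signup_start", 0.0),
    ("app_background", 20.0),
    ("app_foreground", 80.0),
    ("signup_complete", 95.0),
]
active_seconds = active_time_to_sign_up(session)
```

Here the wall-clock gap between start and completion is 95 seconds, but the minute spent in the messaging app is subtracted, leaving 35 seconds of actual sign-up time. Even this small refinement requires three pieces of state per session instead of one.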

Maybe you’re asking why you couldn’t simply compute the Time to Sign Up metric in the client and send it as an event. While this may sound feasible at first, it does not work in practice. Every relevant stateful metric would have to be computed in the client across all device types, user-flow variations, and app versions, and then maintained over time. That overhead is too high: it leads to inaccurate metrics and a lack of trust, and it degrades client performance. Even when companies commit to this approach with all seriousness, they are quickly forced to abandon it, leaving the ops team where it began: stuck with low-level performance visibility and no understanding of the user experience.

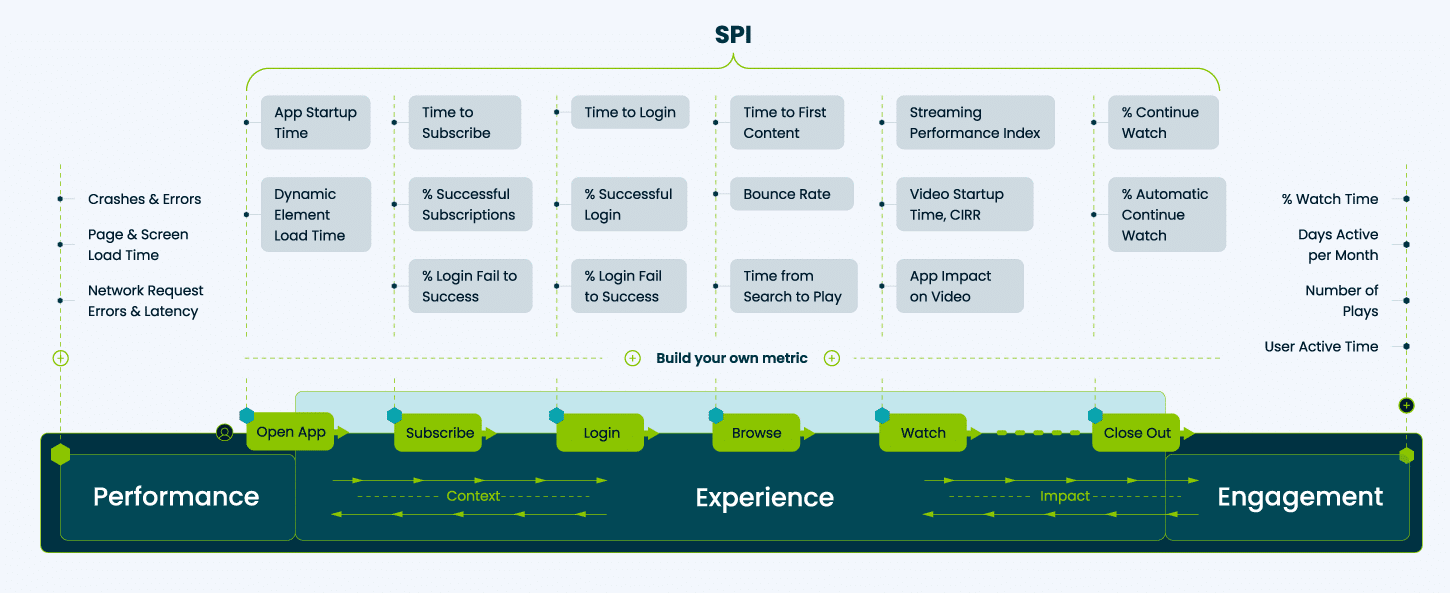

The illustration above shows what we mean by experience metrics for a video streaming app. On the left are low-level performance metrics (the focus of RUM tools). In the middle are critical experience metrics, categorized by each part of the user flow. On the right are engagement metrics (the focus of product analytics). A comprehensive understanding of user experience requires measuring all three in real-time in a connected manner:

- Engagement reflects outcomes that matter for the business. It helps us understand the impact of experience and define what a good experience is.

- Performance helps to diagnose why we have an experience issue.

- Experience is the connection between performance and engagement. It is the most important missing piece in every business today and it cannot be supplied by today’s observability tools.

Experience-Centric Unified Monitoring & Analytics

Transform critical experiences, performance, and engagement into real-time operational metrics